The current state of data engineering offers a plethora of options in the market, which can be challenging when selecting the right tool We are approaching a period where the traditional boundaries between between databases, datalakes, and data warehouses are overlapping. As always, it is important to think about what is the business case, then do a technology selection afterwards.

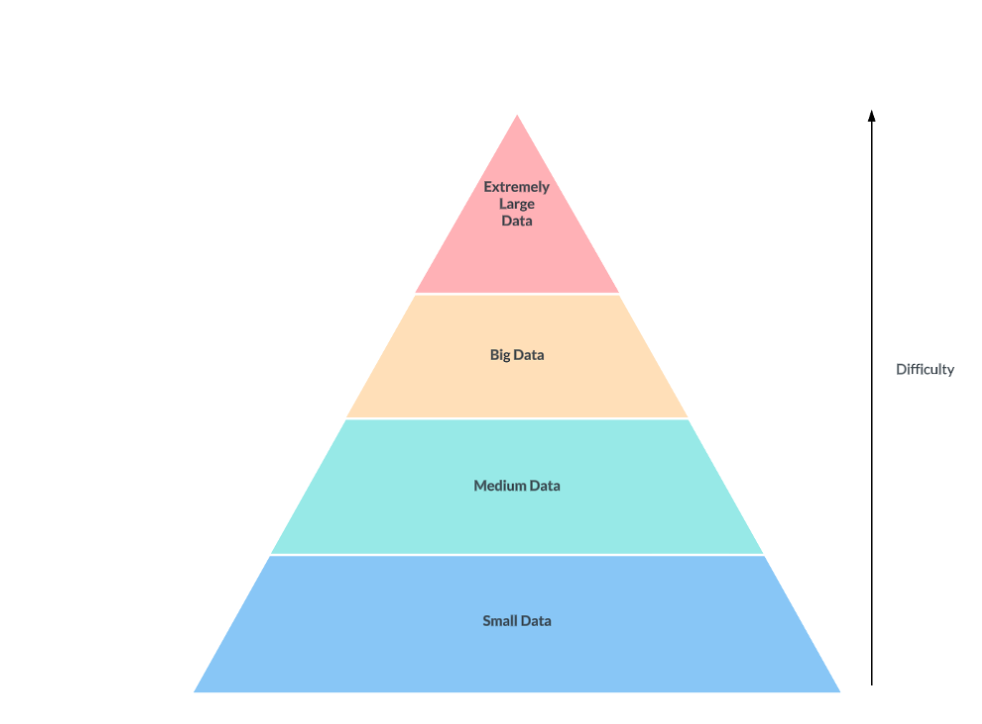

This diagram is simple, but merits some discussion.

Most companies in the small and medium data fields can get away with simpler architectures with a standard database powering their business applications. However it is when you get into big data and extremely large data do you want to start looking at more advanced platforms.

The Open Source Table Format Wars Revisited

A growing agreement is forming around the terminology used for Open Table Formats (OTF), also known as Open Source Table Formats (OSTF). These formats are particularly beneficial in scenarios involving big data or extremely large datasets, similar to those managed by companies like Uber and Netflix. Currently, there are three major contenders in the OTF space.

| Platform | Link | Paid Provider |

|---|---|---|

| Apache Hudi | https://hudi.apache | https://onehouse.ai/ |

| Apache Iceberg | https://iceberg.apache.org/ | https://tabular.io/ |

| Databricks | https://docs.databricks.com/en/delta/index.html | Via hyperscaler |

Several announcements from AWS recently, lead me to believe of some more support of Apache Iceberg into the AWS ecosystem

AWS Glue Data Catalog now supports automatic compaction of Apache Iceberg tables

Every datalake eventually suffers from a small file problem. What this means is if you have too many files in a given S3 partition (aka file path), performance degrades substantially. To alleviate this, compaction jobs are run to merge files to bigger files to improve performance. In managed paid platforms, this is done automatically for you, but in the open source platforms, developers are on the hook in needing to do this.

I was surprised to read that now if you use Apache Iceberg tables, developers no long have to deal with compaction jobs. Now to the second announcement:

Amazon Redshift announces general availability of support for Apache Iceberg

If you are using Amazon Redshift, you can do federated queries without needing to go through the hassle of manually mounting data catalogs.

In this video, you can watch Amazon talk about Iceberg explicitly in their AWS storage:session from re:Invent.

This generally leads me to believe that Apache Iceberg probably will be more integrated into the Amazon ecosystem in the near future.

Apache Hudi

Apache Hudi recently released version 0.14.0 which has some major changes such as Record Level Indexing

https://hudi.apache.org/releases/release-0.14.0/

One Table

Another kind of weird development which was announced right before Re:invent was the announcement of OneTable,

Microsoft, the Hudi team, and the Databricks team got together to create a new standard that serves as an abstraction layer on top of an OTF. This is odd to me, because not too many organizations have these data stacks concurrently deployed.

However probably in the next couple years as abstraction layers get created on top of OTFs, this will be something to watch.

Amazon S3 Express One Zone Storage Class

Probably one of the most important but probably buried news from re:Invent was the announcement of Amazon S3 Express One Zone

https://aws.amazon.com/s3/storage-classes/express-one-zone/

With this, we can now have single digit millisecond access to data information to S3, which leads to a weird question of datalakes encroaching onto database territory if they now can meet higher SLAs. However there are some caveats with this as there is limited region availability, and it is in one zone so think about your disaster recovery requirements. This is one feature I would definitely watch.

Zero ETL Trends

Zero ETL is the ability for behind the scenes replication for Aurora, RDS, and Dynamo to replicate to Redshift. If you have a use case where Slowly Changing Dimensions (SCD) Type 1 is acceptable, these are all worth taking a look at. From my understanding, when replication occurs, there is no connection penalty to your Redshift cluster.

https://aws.amazon.com/about-aws/whats-new/2023/11/amazon-dynamodb-zero-etl-integration-redshift/

Amazon OpenSearch Service zero-ETL integration with Amazon S3 preview now available

https://aws.amazon.com/about-aws/whats-new/2023/11/amazon-opensearch-zero-etl-integration-s3-preview/

AWS announces Amazon DynamoDB zero-ETL integration with Amazon OpenSearch Service

https://aws.amazon.com/about-aws/whats-new/2023/11/amazon-dynamodb-zero-etl-integration-amazon-opensearch-service/

AWS CloudTrail Lake data now available for zero-ETL analysis in Amazon Athena

https://aws.amazon.com/about-aws/whats-new/2023/11/aws-cloudtrail-lake-zero-etl-anlysis-athena/

Spark/Glue/EMR Announcements

Glue Serverless Spark UI

Now it is way easier to debug glue jobs as the Spark UI doesn’t have to manually be provisioned.

Glue native connectors: Teradata, SAP HANA, Azure SQL, Azure Cosmos DB, Vertica, and MongoDB

AWS Glue announces entity-level actions to manage sensitive data

https://aws.amazon.com/about-aws/whats-new/2023/11/aws-glue-entity-level-actions-sensitive-data/

Glue now supports Gitlab and Bitbucket

Trusted identity propagation

Propagate oauth 2.0 credentials to EMR

https://docs.aws.amazon.com/singlesignon/latest/userguide/trustedidentitypropagation-overview.html

Databases

Announcing Amazon Aurora Limitless Database

https://aws.amazon.com/about-aws/whats-new/2023/11/amazon-aurora-limitless-database/

Conclusion

It is exciting to see the OTF ecosystem evolve. Apache Hudi is still a great and mature option, with Apache Iceberg now being more integrated with the AWS ecosystem.

Zero ETL has the potential to save your organization a ton of time if your data sources are supported by it.

Something to consider is that major shifts in data engineering occur every couple of months, so keep an eye on new developments, as they can have profound impacts on enterprise data strategies and operations.